The EX380 exam was one of the more difficult exams I took with OpenShift; it took me about three attempts before I was able to pass. However, while some objectives took a number of attempts to figure out, log forwarding was not one of them. In fact, I got it right consistently in every exam. So I was pretty eager to reproduce it on my OKD cluster.

Except I couldn't, at least initially. For a while, I thought that the upstream version was not available as an operator, so after searching, I asked in #openshift-users on the Kubernetes Slack what logging solutions users put on their OKD clusters:

- Me: For OKD users: What do you use for log forwarding? I thought about using whatever upstream version that Red Hat Logging uses, but it seems a bit too complete to deploy for my little home lab, so I am interested in hearing alternatives.

- Somebody: I’m using OKD Logging Operator, then the Cluster Log Forwarder and forwarding to a self hosted Vector instance.

- Me: Is this the OKD logging operator that you are referring to or is there another that I somehow missed?

- Somebody: no, I’m using the okderators catalog. Then installed the OKD Logging Operator, then on its Overview page you see the Cluster Log Forwarder tab. In here, I’ve created a new one (of type http, method POST with username and password via https url).

I feel dumb, because I previously set up Tekton pipelines from source, and had I known that a specially curated version for OKD was available, I would have used that and saved myself the work.

Needless to say, I will redo my Tekton setup. But that is for another time. For now, we'll walk through the setup of forwarding OpenShift/OKD logs over to Syslog.

Initial Setup

First, a disclaimer:

THE FOLLOWING IS A MINIMAL WORKING EXAMPLE AND SHOULD NOT BE USED IN PRODUCTION WITHOUT REVIEW AND REVISION.

Now we proceed.

First, we will install the OKDerators catalog.

➜ blog.example.com git:(master) ✗ curl -s https://raw.githubusercontent.com/okd-project/okderators-catalog-index/refs/heads/release-4.19/hack/install-catalog.sh | bash

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 985 100 985 0 0 9115 0 --:--:-- --:--:-- --:--:-- 9120

catalogsource.operators.coreos.com/okderators created

Then we created the namespace:

➜ blog.example.com git:(master) oc create ns openshift-logging

namespace/openshift-logging created

Then we will label the namespace, because we also want to enable cluster monitoring (metrics) for it.

➜ blog.example.com git:(master) oc label ns openshift-logging openshift.io/cluster-monitoring="true"

namespace/openshift-logging labeled

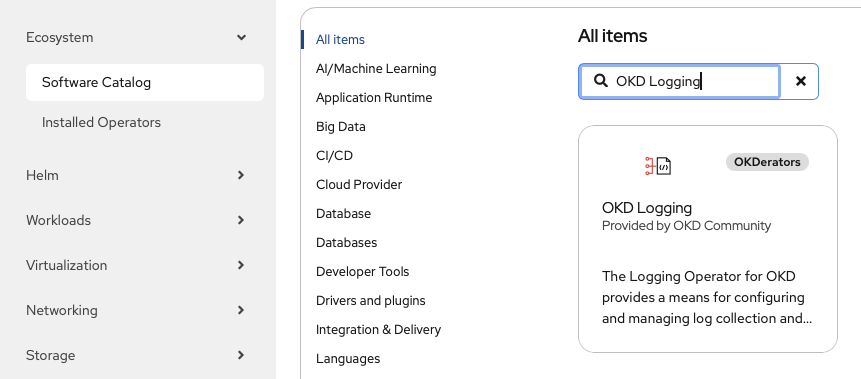

With that done, we will log into the OKD console and look for OKD logging in the Software Catalog:

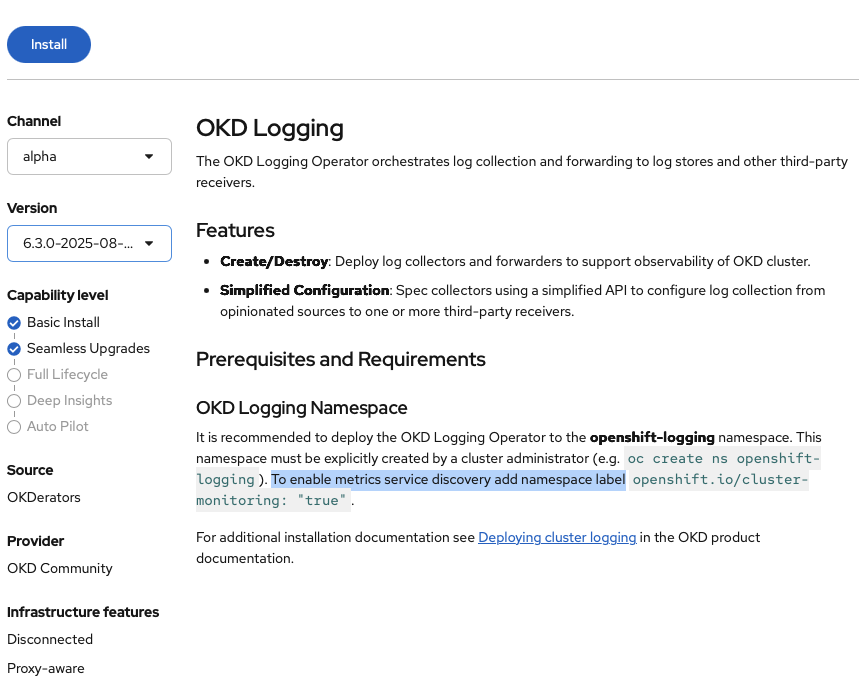

Then we go to its page:

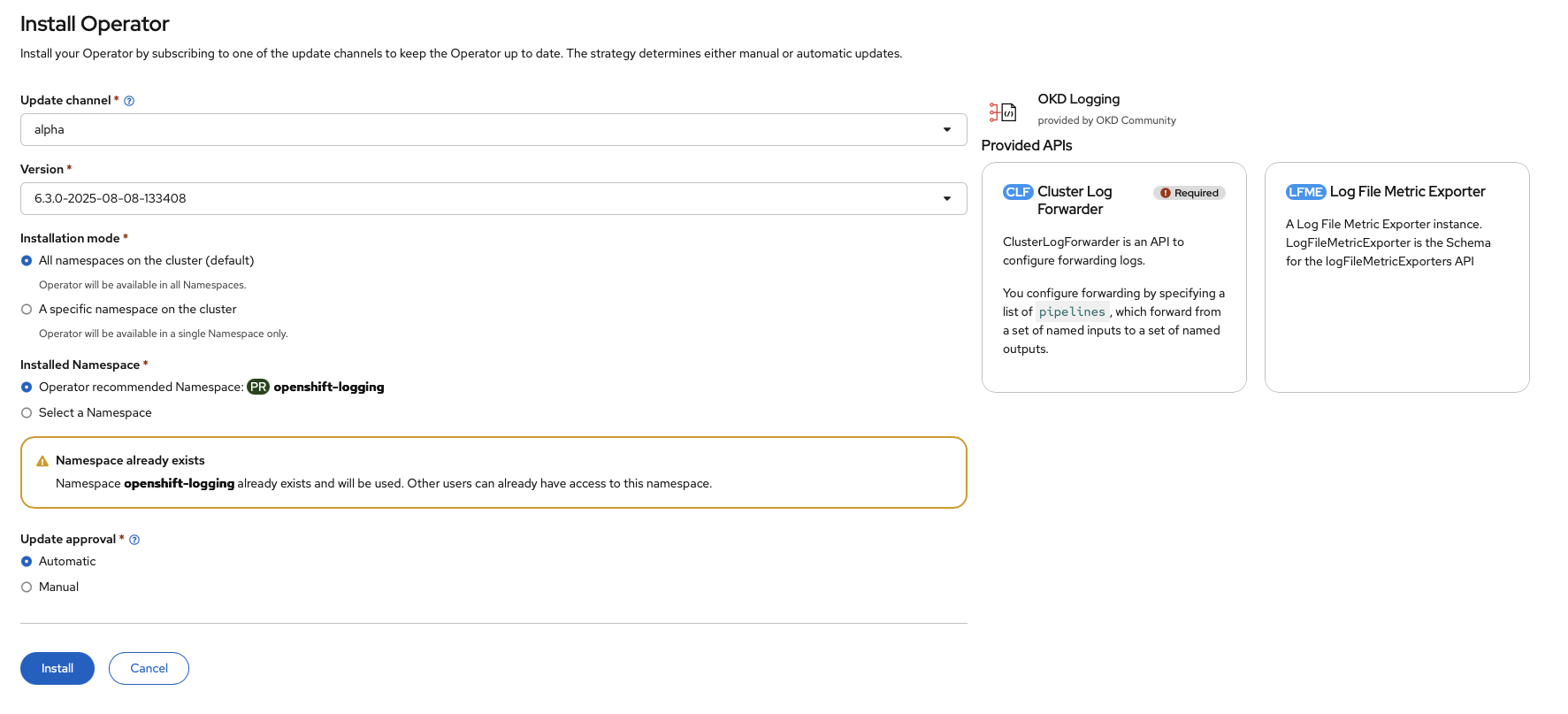

We begin the installation, accepting all the defaults:

We wait until it is done:

We could set up the forwarder on this screen, but we won't. Instead, we will do the rest via code.

At this point, we should verify that we have it all set up:

➜ blog.example.com git:(master) ✗ oc get csv -n openshift-logging

NAME DISPLAY VERSION REPLACES PHASE

cert-manager.v1.16.5 cert-manager 1.16.5 cert-manager.v1.16.1 Succeeded

cluster-logging.v6.3.0-2025-08-08-133408 OKD Logging 6.3.0-2025-08-08-133408 cluster-logging.v6.2.0-2025-05-26-200125 Succeeded

kubernetes-nmstate-operator.v0.85.1 Kubernetes Nmstate 0.85.1 kubernetes-nmstate-operator.v0.47.0 Succeeded

kubevirt-hyperconverged-operator.v1.16.0 KubeVirt HyperConverged Cluster Operator 1.16.0 kubevirt-hyperconverged-operator.v1.15.2 Succeeded

Now we are ready to set up the log forwarder:

Log Forwarder Deployment

Prior to creating the log forwarder, we need to create a service account with permissions to collect and forward logs. We apply this manifest:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: syslog-forwarder-sa
  namespace: openshift-logging

Once the service account was created, we granted it the following cluster roles:

oc adm policy add-cluster-role-to-user collect-application-logs system:serviceaccount:openshift-logging:syslog-forwarder-sa

oc adm policy add-cluster-role-to-user collect-infrastructure-logs system:serviceaccount:openshift-logging:syslog-forwarder-sa

oc adm policy add-cluster-role-to-user collect-audit-logs system:serviceaccount:openshift-logging:syslog-forwarder-sa

Now we can create the cluster log forwarder. It will forward application, audit and infrastructure logs to the syslog server at 172.16.1.35:

apiVersion: observability.openshift.io/v1
kind: ClusterLogForwarder
metadata:
  name: syslog-forwarder-homelab
  namespace: openshift-logging
spec:
  collection:
    logs:
      type: Vector # Weirdly enough, this is accepted even though it is not documented
    resources:
      limits:
        memory: 736Mi
      requests:
        cpu: 100m
        memory: 736Mi
  serviceAccount:
    name: "syslog-forwarder-sa"
  outputs:
    - name: rsyslog-audit
      type: syslog
      syslog:
        msgID: audit
        rfc: RFC5424
        severity: debug
        url: 'tcp://172.16.1.35:514'
    - name: rsyslog-infra
      type: syslog
      syslog:
        msgID: infra
        rfc: RFC5424
        severity: debug
        url: 'tcp://172.16.1.35:514'
    - name: rsyslog-app
      type: syslog
      syslog:
        msgID: app
        rfc: RFC5424
        severity: debug
        url: 'tcp://172.16.1.35:514'
  pipelines:
    - name: rsyslog-audit
      inputRefs:
        - audit
      outputRefs:
        - rsyslog-audit
    - name: rsyslog
      inputRefs:
        - infrastructure
      outputRefs:
        - rsyslog-infra
    - name: rsyslog-app
      inputRefs:
        - application
      outputRefs:
        - rsyslog-app

It took a bit to get it working. It was not until I looked at the API in the cluster that I found it has changed since 4.14 (which is the version I tested):

- You do not use `apiVersion: logging.openshift.io/v1` with the current OpenShift/OKD release, but instead use `apiVersion: observability.openshift.io/v1`.

- You must set up a service account beforehand, and the field must be `serviceAccount`, not `serviceAccountName`, as the service account doesn't get created automatically (which is why we created the service account before we set up the Cluster Log Forwarder).
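To make those two differences concrete, here is a small Python sketch (not part of the operator or of my setup) that flags the old-style fields in a manifest already loaded into a dictionary. The function name `check_forwarder` and both sample manifests are made up for illustration; the only facts it relies on are the two field changes listed above.

```python
# Hypothetical helper: flag fields that belong to the older logging API.
LEGACY_API = "logging.openshift.io/v1"
CURRENT_API = "observability.openshift.io/v1"


def check_forwarder(manifest: dict) -> list:
    """Return human-readable problems found in a ClusterLogForwarder manifest."""
    problems = []
    if manifest.get("apiVersion") == LEGACY_API:
        problems.append(f"apiVersion should be {CURRENT_API}, not {LEGACY_API}")
    spec = manifest.get("spec") or {}
    if "serviceAccountName" in spec:
        problems.append("use spec.serviceAccount.name instead of spec.serviceAccountName")
    if not (spec.get("serviceAccount") or {}).get("name"):
        problems.append("spec.serviceAccount.name is missing; create the service account first")
    return problems


# Made-up manifests: one in the old style, one in the new style.
old_style = {
    "apiVersion": "logging.openshift.io/v1",
    "kind": "ClusterLogForwarder",
    "spec": {"serviceAccountName": "syslog-forwarder-sa"},
}
new_style = {
    "apiVersion": "observability.openshift.io/v1",
    "kind": "ClusterLogForwarder",
    "spec": {"serviceAccount": {"name": "syslog-forwarder-sa"}},
}

for problem in check_forwarder(old_style):
    print(problem)
print(check_forwarder(new_style))
```

The old-style manifest trips all three checks, while the new-style one comes back with an empty list.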

With the manifest created, we applied it:

➜ blog.example.com git:(master) ✗ oc apply -f ~/src/home_lab/okd/cluster_log_forwarder.yaml

clusterlogforwarder.observability.openshift.io/instance created

We verified that the instances are launched (or in process of launching):

➜ blog.example.com git:(master) ✗ oc get pods -o wide -n openshift-logging

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

cluster-logging-operator-7ffb497f84-j2k8t 1/1 Running 0 112m 10.130.0.5 worker00.node.example.com <none> <none>

syslog-forwarder-homelab-6szm4 1/1 Running 8 129m 10.131.1.227 worker01.node.example.com <none> <none>

syslog-forwarder-homelab-74dtk 1/1 Running 1 3h14m 10.129.1.230 control02.node.example.com <none> <none>

syslog-forwarder-homelab-7b6l8 1/1 Running 10 (2m4s ago) 129m 10.130.0.41 worker00.node.example.com <none> <none>

syslog-forwarder-homelab-djhs6 1/1 Running 1 3h14m 10.128.3.126 control01.node.example.com <none> <none>

syslog-forwarder-homelab-l2snt 0/1 CrashLoopBackOff 18 (102s ago) 75m 10.131.2.35 worker04.node.example.com <none> <none>

syslog-forwarder-homelab-l8kdl 1/1 Running 11 (85m ago) 130m 10.129.2.37 worker02.node.example.com <none> <none>

syslog-forwarder-homelab-qgntb 1/1 Running 1 3h14m 10.128.0.162 control00.node.example.com <none> <none>

syslog-forwarder-homelab-vr7zn 1/1 Running 18 (5m26s ago) 75m 10.130.2.22 worker03.node.example.com <none> <none>

Event Router Setup

Finally, we set up the event router, which is used to forward Kubernetes events, with this OpenShift template:

apiVersion: template.openshift.io/v1
kind: Template
metadata:
  name: eventrouter-template
  annotations:
    description: "A pod forwarding kubernetes events to OpenShift Logging stack."
    tags: "events,EFK,logging,cluster-logging"
objects:
  - kind: ServiceAccount
    apiVersion: v1
    metadata:
      name: eventrouter
      namespace: ${NAMESPACE}
  - kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: event-reader
    rules:
      - apiGroups: [""]
        resources: ["events"]
        verbs: ["get", "watch", "list"]
  - kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: event-reader-binding
    subjects:
      - kind: ServiceAccount
        name: eventrouter
        namespace: ${NAMESPACE}
    roleRef:
      kind: ClusterRole
      name: event-reader
  - kind: ConfigMap
    apiVersion: v1
    metadata:
      name: eventrouter
      namespace: ${NAMESPACE}
    data:
      config.json: |-
        {
          "sink": "stdout"
        }
  - kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: eventrouter
      namespace: ${NAMESPACE}
      labels:
        component: "eventrouter"
        logging-infra: "eventrouter"
        provider: "openshift"
    spec:
      selector:
        matchLabels:
          component: "eventrouter"
          logging-infra: "eventrouter"
          provider: "openshift"
      replicas: 1
      template:
        metadata:
          labels:
            component: "eventrouter"
            logging-infra: "eventrouter"
            provider: "openshift"
          name: eventrouter
        spec:
          serviceAccount: eventrouter
          containers:
            - name: kube-eventrouter
              image: ${IMAGE}
              imagePullPolicy: IfNotPresent
              resources:
                requests:
                  cpu: ${CPU}
                  memory: ${MEMORY}
              volumeMounts:
                - name: config-volume
                  mountPath: /etc/eventrouter
              securityContext:
                allowPrivilegeEscalation: false
                capabilities:
                  drop: ["ALL"]
          securityContext:
            runAsNonRoot: true
            seccompProfile:
              type: RuntimeDefault
          volumes:
            - name: config-volume
              configMap:
                name: eventrouter
parameters:
  - name: IMAGE
    displayName: Image
    value: "quay.io/openshift-logging/eventrouter:latest"
  - name: CPU
    displayName: CPU
    value: "100m"
  - name: MEMORY
    displayName: Memory
    value: "128Mi"
  - name: NAMESPACE
    displayName: Namespace
    value: "openshift-logging"

(I read someplace that it is deprecated, and it looks like it was taken out of the recent Red Hat logging documentation, but I could not find an alternative, so we are going with this for now.)

Putting that aside, we applied the manifest:

➜ blog.example.com git:(master) ✗ oc process -f ~/src/home_lab/okd/event_router.yaml | oc apply -n openshift-logging -f -

serviceaccount/eventrouter created

clusterrole.rbac.authorization.k8s.io/event-reader created

clusterrolebinding.rbac.authorization.k8s.io/event-reader-binding created

configmap/eventrouter created

deployment.apps/eventrouter created

And confirmed that it is running:

➜ blog.example.com git:(master) ✗ oc get pods --selector component=eventrouter -o name -n openshift-logging

pod/eventrouter-f665f9db7-46rs7


Verification

We logged into the syslog server and confirmed that it is receiving the events. Here is the UI:

And here is the output from the CLI:

root@nas01:/volume1/.@root # tail -f /var/log/messages

2025-12-12T18:11:40.420794-06:00 control01.node.example.com ntent@sha256: b9bfbd59e7f5e692935c21488a7106bb66b69c8eb9de5713d788476448b7d0cc\",\"command\":[\"cluster-kube-scheduler-operator\",\"cert-syncer\"],\"args\":[\"--kubeconfig=/etc/kubernetes/static-pod-resources/configmaps/kube-scheduler-cert-syncer-kubeconfig/kubeconfig\",\"--namespace=$(POD_NAMESPACE)\",\"--destination-dir=/etc/kubernetes/static-pod-certs\"],\"env\":[{\"name\":\"POD_NAME\",\"valueFrom\":{\"fieldRef\":{\"fieldPath\":\"metadata.name\"}}},{\"name\":\"POD_NAMESPACE\",\"valueFrom\":{\"fieldRef\":{\"fieldPath\":\"metadata.namespace\"}}}],\"resources\":{\"requests\":{\"cpu\":\"5m\",\"memory\":\"50Mi\"}},\"volumeMounts\":[{\"name\":\"tmp\",\"mountPath\":\"/tmp\"},{\"name\":\"resource-dir\",\"mountPath\":\"/etc/kubernetes/static-pod-resources\"},{\"name\":\"cert-dir\",\"mountPath\":\"/etc/kubernetes/static-pod-certs\"}],\"terminationMessagePolicy\":\"FallbackToLogsOnError\",\"imagePullPolicy\":\"IfNotPresent\",\"securityContext\":{\"readOnlyRootFilesystem\":true}},{\"name\":\"kube-scheduler-recovery-controller\",\"image\":\"quay.io/okd/scos-content@sha256:b9bfbd59e7f5e692935c21488a7106bb66b69c8eb9de5713d788476448b7d0cc\",\"command\":[\"/bin/bash\",\"-euxo\",\"pipefail\",\"-c\"],\"args\":[\"timeout 3m /bin/bash -exuo pipefail -c 'while [ -n \\\"$(ss -Htanop \\\\( sport = 11443 \\\\))\\\" ]; do sleep 1; done'\\n\\nexec cluster-kube-scheduler-operator cert-recovery-controller --kubeconfig=/etc/kubernetes/static-pod-resources/configmaps/kube-scheduler-cert-syncer-kubeconfig/kubeconfig --namespace=${POD_NAMESPACE} --listen=0.0.0.0:11443 
-v=2\\n\"],\"env\":[{\"name\":\"POD_NAMESPACE\",\"valueFrom\":{\"fieldRef\":{\"fieldPath\":\"metadata.namespace\"}}}],\"resources\":{\"requests\":{\"cpu\":\"5m\",\"memory\":\"50Mi\"}},\"volumeMounts\":[{\"name\":\"tmp\",\"mountPath\":\"/tmp\"},{\"name\":\"resource-dir\",\"mountPath\":\"/etc/kubernetes/static-pod-resources\"},{\"name\":\"cert-dir\",\"mountPath\":\"/etc/kubernetes/static-pod-certs\"}],\"terminationMessagePolicy\":\"FallbackToLogsOnError\",\"imagePullPolicy\":\"IfNotPresent\",\"securityContext\":{\"readOnlyRootFilesystem\":true}}],\"hostNetwork\":true,\"tolerations\":[{\"operator\":\"Exists\"}],\"priorityClassName\":\"system-node-critical\"},\"status\":{}}","openshift":{"cluster_id":"f5db0d9f-b567-4cc7-a5b1-1f0f4504e63d","sequence":1765584699621738136},"timestamp":"2025-12-11T18:38:39.573343402Z"}

2025-12-12T18:11:40.420794-06:00 control01.node.example.com ntent@sha256: b9bfbd59e7f5e692935c21488a7106bb66b69c8eb9de5713d788476448b7d0cc\",\"command\":[\"cluster-kube-scheduler-operator\",\"cert-syncer\"],\"args\":[\"--kubeconfig=/etc/kubernetes/static-pod-resources/configmaps/kube-scheduler-cert-syncer-kubeconfig/kubeconfig\",\"--namespace=$(POD_NAMESPACE)\",\"--destination-dir=/etc/kubernetes/static-pod-certs\"],\"env\":[{\"name\":\"POD_NAME\",\"valueFrom\":{\"fieldRef\":{\"fieldPath\":\"metadata.name\"}}},{\"name\":\"POD_NAMESPACE\",\"valueFrom\":{\"fieldRef\":{\"fieldPath\":\"metadata.namespace\"}}}],\"resources\":{\"requests\":{\"cpu\":\"5m\",\"memory\":\"50Mi\"}},\"volumeMounts\":[{\"name\":\"tmp\",\"mountPath\":\"/tmp\"},{\"name\":\"resource-dir\",\"mountPath\":\"/etc/kubernetes/static-pod-resources\"},{\"name\":\"cert-dir\",\"mountPath\":\"/etc/kubernetes/static-pod-certs\"}],\"terminationMessagePolicy\":\"FallbackToLogsOnError\",\"imagePullPolicy\":\"IfNotPresent\",\"securityContext\":{\"readOnlyRootFilesystem\":true}},{\"name\":\"kube-scheduler-recovery-controller\",\"image\":\"quay.io/okd/scos-content@sha256:b9bfbd59e7f5e692935c21488a7106bb66b69c8eb9de5713d788476448b7d0cc\",\"command\":[\"/bin/bash\",\"-euxo\",\"pipefail\",\"-c\"],\"args\":[\"timeout 3m /bin/bash -exuo pipefail -c 'while [ -n \\\"$(ss -Htanop \\\\( sport = 11443 \\\\))\\\" ]; do sleep 1; done'\\n\\nexec cluster-kube-scheduler-operator cert-recovery-controller --kubeconfig=/etc/kubernetes/static-pod-resources/configmaps/kube-scheduler-cert-syncer-kubeconfig/kubeconfig --namespace=${POD_NAMESPACE} --listen=0.0.0.0:11443 
-v=2\\n\"],\"env\":[{\"name\":\"POD_NAMESPACE\",\"valueFrom\":{\"fieldRef\":{\"fieldPath\":\"metadata.namespace\"}}}],\"resources\":{\"requests\":{\"cpu\":\"5m\",\"memory\":\"50Mi\"}},\"volumeMounts\":[{\"name\":\"tmp\",\"mountPath\":\"/tmp\"},{\"name\":\"resource-dir\",\"mountPath\":\"/etc/kubernetes/static-pod-resources\"},{\"name\":\"cert-dir\",\"mountPath\":\"/etc/kubernetes/static-pod-certs\"}],\"terminationMessagePolicy\":\"FallbackToLogsOnError\",\"imagePullPolicy\":\"IfNotPresent\",\"securityContext\":{\"readOnlyRootFilesystem\":true}}],\"hostNetwork\":true,\"tolerations\":[{\"operator\":\"Exists\"}],\"priorityClassName\":\"system-node-critical\"},\"status\":{}}","openshift":{"cluster_id":"f5db0d9f-b567-4cc7-a5b1-1f0f4504e63d","sequence":1765584699622098630},"timestamp":"2025-12-11T18:38:39.576777195Z"}

2025-12-12T18:11:46.224612-06:00 nas01 sshd-session[10083]: Postponed keyboard-interactive/pam for rilindo from 192.168.1.114 port 60760 ssh2 [preauth]

2025-12-12T18:11:46.275077-06:00 nas01 sshd-session[10083]: lastlog_openseek: Couldn't stat /var/log/lastlog: No such file or directory

2025-12-12T18:11:46.275445-06:00 nas01 sshd-session[10083]: lastlog_openseek: Couldn't stat /var/log/lastlog: No such file or directory

2025-12-12T18:11:46.258910-06:00 nas01 sshd-session[10083]: add 192.168.1.114 login 1 to ipblockman 0

2025-12-12T18:11:46.258970-06:00 nas01 sshd-session[10083]: Accepted keyboard-interactive/pam for rilindo from 192.168.1.114 port 60760 ssh2

2025-12-12T18:11:51.455738-06:00 nas01 su: + pts/2 rilindo:root

2025-12-12T18:11:53.110353-06:00 control01.node.example.com : {\"path\":\"livez?exclude=etcd\",\"port\":6443,\"scheme\":\"HTTPS\"},\"timeoutSeconds\":10,\"periodSeconds\":10,\"successThreshold\":1,\"failureThreshold\":3},\"readinessProbe\":{\"httpGet\":{\"path\":\"readyz\",\"port\":6443,\"scheme\":\"HTTPS\"},\"timeoutSeconds\":10,\"periodSeconds\":5,\"successThreshold\":1,\"failureThreshold\":3},\"startupProbe\":{\"httpGet\":{\"path\":\"livez\",\"port\":6443,\"scheme\":\"HTTPS\"},\"timeoutSeconds\":10,\"periodSeconds\":5,\"successThreshold\":1,\"failureThreshold\":30},\"terminationMessagePolicy\":\"FallbackToLogsOnError\",\"imagePullPolicy\":\"IfNotPresent\",\"securityContext\":{\"privileged\":true,\"readOnlyRootFilesystem\":true}},{\"name\":\"kube-apiserver-cert-syncer\",\"image\":\"quay.io/okd/scos-content@sha256:4691db53ffa1f2cb448123d1110edaab2a7bdcdf9d6db0789144f24f325af68c\",\"command\":[\"cluster-kube-apiserver-operator\",\"cert-syncer\"],\"args\":[\"--kubeconfig=/etc/kubernetes/static-pod-resources/configmaps/kube-apiserver-cert-syncer-kubeconfig/kubeconfig\",\"--namespace=$(POD_NAMESPACE)\",\"--destination-dir=/etc/kubernetes/static-pod-certs\"],\"env\":[{\"name\":\"POD_NAME\",\"valueFrom\":{\"fieldRef\":{\"fieldPath\":\"metadata.name\"}}},{\"name\":\"POD_NAMESPACE\",\"valueFrom\":{\"fieldRef\":{\"fieldPath\":\"metadata.namespace\"}}}],\"resources\":{\"requests\":{\"cpu\":\"5m\",\"memory\":\"50Mi\"}},\"volumeMounts\":[{\"name\":\"resource-dir\",\"mountPath\":\"/etc/kubernetes/static-pod-resources\"},{\"name\":\"cert-dir\",\"mountPath\":\"/etc/kubernetes/static-pod-certs\"},{\"name\":\"tmp-dir\",\"mountPath\":\"/tmp\"}],\"terminationMessagePolicy\":\"FallbackToLogsOnError\",\"imagePullPolicy\":\"IfNotPresent\",\"securityContext\":{\"readOnlyRootFilesystem\":true}},{\"name\":\"kube-apiserver-cert-regeneration-controller\",\"image\":\"quay.io/okd/scos-content@sha256:4691db53ffa1f2cb448123d1110edaab2a7bdcdf9d6db0789144f24f325af68c\",\"command\":[\"cluster-kube-
apiserver-operator\",\"cert-regeneration-controller\"],\"args\":[\"--kubeconfig=/etc/kubernetes/static-pod-resources/configmaps/kube-apiserver-cert-syncer-kubeconfig/kubeconfig\",\"--namespace=$(POD_NAMESPACE)\",\"-v=2\"],\"env\":[{\"name\":\"POD_NAMESPACE\",\"valueFrom\":{\"fieldRef\":{\"fieldPath\":\"metadata.namespace\"}}},{\"name\":\"OPERATOR_IMAGE_VERSION\",\"value\":\"4.20.0-okd-scos.11\"}],\"resources\":{\"requests\":{\"cpu\":\"5m\",\"memory\":\"50Mi\"}},\"volumeMounts\":[{\"name\":\"resource-dir\",\"mountPath\":\"/etc/kubernetes/static-pod-resources\"},{\"name\":\"tmp-dir\",\"mountPath\":\"/tmp\"}],\"terminationMessagePolicy\":\"FallbackToLogsOnError\",\"imagePullPolicy\":\"IfNotPresent\",\"securityContext\":{\"readOnlyRootFilesystem\":true}},{\"name\":\"kube-apiserver-insecure-readyz\",\"image\":\"quay.io/okd/scos-content@sha256:4691db53ffa1f2cb448123d1110edaab2a7bdcdf9d6db0789144f24f325af68c\",\"command\":[\"cluster-kube-apiserver-operator\",\"insecure-readyz\"],\"args\":[\"--insecure-port=6080\",\"--delegate-url=https://localhost:6443/readyz\"],\"ports\":[{\"containerPort\":6080}],\"resources\":{\"requests\":{\"cpu\":\"5m\",\"memory\":\"50Mi\"}},\"terminationMessagePolicy\":\"FallbackToLogsOnError\",\"imagePullPolicy\":\"IfNotPresent\",\"securityContext\":{\"readOnlyRootFilesystem\":true}},{\"name\":\"kube-apiserver-check-endpoints\",\"image\":\"quay.io/okd/scos-content@sha256:4691db53ffa1f2cb448123d1110edaab2a7bdcdf9d6db0789144f24f325af68c\",\"command\":[\"cluster-kube-apiserver-operator\",\"check-endpoints\"],\"args\":[\"--kubeconfig\",\"/etc/kubernetes/static-pod-certs/configmaps/check-endpoints-kubeconfig/kubeconfig\",\"--listen\",\"0.0.0.0:17697\",\"--namespace\",\"$(POD_NAMESPACE)\",\"--v\",\"2\"],\"ports\":[{\"name\":\"check-endpoints\",\"hostPort\":17697,\"containerPort\":17697,\"protocol\":\"TCP\"}],\"env\":[{\"name\":\"POD_NAME\",\"valueFrom\":{\"fieldRef\":{\"fieldPath\":\"metadata.name\"}}},{\"name\":\"POD_NAMESPACE\",\"valueFrom\":{\"fieldRef
\":{\"fieldPath\":\"metadata.namespace\"}}}],\"resources\":{\"requests\":{\"cpu\":\"10m\",\"memory\":\"50Mi\"}},\"volumeMounts\":[{\"name\":\"resource-dir\",\"mountPath\":\"/etc/kubernetes/static-pod-resources\"},{\"name\":\"cert-dir\",\"mountPath\":\"/etc/kubernetes/static-pod-certs\"},{\"name\":\"tmp-dir\",\"mountPath\":\"/tmp\"}],\"livenessProbe\":{\"httpGet\":{\"path\":\"healthz\",\"port\":17697,\"scheme\":\"HTTPS\"},\"initialDelaySeconds\":10,\"timeoutSeconds\":10},\"readinessProbe\":{\"httpGet\":{\"path\":\"healthz\",\"port\":17697,\"scheme\":\"HTTPS\"},\"initialDelaySeconds\":10,\"timeoutSeconds\":10},\"terminationMessagePolicy\":\"FallbackToLogsOnError\",\"imagePullPolicy\":\"IfNotPresent\",\"securityContext\":{\"readOnlyRootFilesystem\":true}}],\"terminationGracePeriodSeconds\":135,\"hostNetwork\":true,\"tolerations\":[{\"operator\":\"Exists\"}],\"priorityClassName\":\"system-node-critical\",\"priority\":2000001000},\"status\":{}}","openshift":{"cluster_id":"f5db0d9f-b567-4cc7-a5b1-1f0f4504e63d","sequence":1765584712067663375},"timestamp":"2025-12-10T23:56:13.601967604Z"}

2025-12-12T18:11:53.110353-06:00 control01.node.example.com : {\"path\":\"livez?exclude=etcd\",\"port\":6443,\"scheme\":\"HTTPS\"},\"timeoutSeconds\":10,\"periodSeconds\":10,\"successThreshold\":1,\"failureThreshold\":3},\"readinessProbe\":{\"httpGet\":{\"path\":\"readyz\",\"port\":6443,\"scheme\":\"HTTPS\"},\"timeoutSeconds\":10,\"periodSeconds\":5,\"successThreshold\":1,\"failureThreshold\":3},\"startupProbe\":{\"httpGet\":{\"path\":\"livez\",\"port\":6443,\"scheme\":\"HTTPS\"},\"timeoutSeconds\":10,\"periodSeconds\":5,\"successThreshold\":1,\"failureThreshold\":30},\"terminationMessagePolicy\":\"FallbackToLogsOnError\",\"imagePullPolicy\":\"IfNotPresent\",\"securityContext\":{\"privileged\":true,\"readOnlyRootFilesystem\":true}},{\"name\":\"kube-apiserver-cert-syncer\",\"image\":\"quay.io/okd/scos-content@sha256:4691db53ffa1f2cb448123d1110edaab2a7bdcdf9d6db0789144f24f325af68c\",\"command\":[\"cluster-kube-apiserver-operator\",\"cert-syncer\"],\"args\":[\"--kubeconfig=/etc/kubernetes/static-pod-resources/configmaps/kube-apiserver-cert-syncer-kubeconfig/kubeconfig\",\"--namespace=$(POD_NAMESPACE)\",\"--destination-dir=/etc/kubernetes/static-pod-certs\"],\"env\":[{\"name\":\"POD_NAME\",\"valueFrom\":{\"fieldRef\":{\"fieldPath\":\"metadata.name\"}}},{\"name\":\"POD_NAMESPACE\",\"valueFrom\":{\"fieldRef\":{\"fieldPath\":\"metadata.namespace\"}}}],\"resources\":{\"requests\":{\"cpu\":\"5m\",\"memory\":\"50Mi\"}},\"volumeMounts\":[{\"name\":\"resource-dir\",\"mountPath\":\"/etc/kubernetes/static-pod-resources\"},{\"name\":\"cert-dir\",\"mountPath\":\"/etc/kubernetes/static-pod-certs\"},{\"name\":\"tmp-dir\",\"mountPath\":\"/tmp\"}],\"terminationMessagePolicy\":\"FallbackToLogsOnError\",\"imagePullPolicy\":\"IfNotPresent\",\"securityContext\":{\"readOnlyRootFilesystem\":true}},{\"name\":\"kube-apiserver-cert-regeneration-controller\",\"image\":\"quay.io/okd/scos-content@sha256:4691db53ffa1f2cb448123d1110edaab2a7bdcdf9d6db0789144f24f325af68c\",\"command\":[\"cluster-kube-
apiserver-operator\",\"cert-regeneration-controller\"],\"args\":[\"--kubeconfig=/etc/kubernetes/static-pod-resources/configmaps/kube-apiserver-cert-syncer-kubeconfig/kubeconfig\",\"--namespace=$(POD_NAMESPACE)\",\"-v=2\"],\"env\":[{\"name\":\"POD_NAMESPACE\",\"valueFrom\":{\"fieldRef\":{\"fieldPath\":\"metadata.namespace\"}}},{\"name\":\"OPERATOR_IMAGE_VERSION\",\"value\":\"4.20.0-okd-scos.11\"}],\"resources\":{\"requests\":{\"cpu\":\"5m\",\"memory\":\"50Mi\"}},\"volumeMounts\":[{\"name\":\"resource-dir\",\"mountPath\":\"/etc/kubernetes/static-pod-resources\"},{\"name\":\"tmp-dir\",\"mountPath\":\"/tmp\"}],\"terminationMessagePolicy\":\"FallbackToLogsOnError\",\"imagePullPolicy\":\"IfNotPresent\",\"securityContext\":{\"readOnlyRootFilesystem\":true}},{\"name\":\"kube-apiserver-insecure-readyz\",\"image\":\"quay.io/okd/scos-content@sha256:4691db53ffa1f2cb448123d1110edaab2a7bdcdf9d6db0789144f24f325af68c\",\"command\":[\"cluster-kube-apiserver-operator\",\"insecure-readyz\"],\"args\":[\"--insecure-port=6080\",\"--delegate-url=https://localhost:6443/readyz\"],\"ports\":[{\"containerPort\":6080}],\"resources\":{\"requests\":{\"cpu\":\"5m\",\"memory\":\"50Mi\"}},\"terminationMessagePolicy\":\"FallbackToLogsOnError\",\"imagePullPolicy\":\"IfNotPresent\",\"securityContext\":{\"readOnlyRootFilesystem\":true}},{\"name\":\"kube-apiserver-check-endpoints\",\"image\":\"quay.io/okd/scos-content@sha256:4691db53ffa1f2cb448123d1110edaab2a7bdcdf9d6db0789144f24f325af68c\",\"command\":[\"cluster-kube-apiserver-operator\",\"check-endpoints\"],\"args\":[\"--kubeconfig\",\"/etc/kubernetes/static-pod-certs/configmaps/check-endpoints-kubeconfig/kubeconfig\",\"--listen\",\"0.0.0.0:17697\",\"--namespace\",\"$(POD_NAMESPACE)\",\"--v\",\"2\"],\"ports\":[{\"name\":\"check-endpoints\",\"hostPort\":17697,\"containerPort\":17697,\"protocol\":\"TCP\"}],\"env\":[{\"name\":\"POD_NAME\",\"valueFrom\":{\"fieldRef\":{\"fieldPath\":\"metadata.name\"}}},{\"name\":\"POD_NAMESPACE\",\"valueFrom\":{\"fieldRef
\":{\"fieldPath\":\"metadata.namespace\"}}}],\"resources\":{\"requests\":{\"cpu\":\"10m\",\"memory\":\"50Mi\"}},\"volumeMounts\":[{\"name\":\"resource-dir\",\"mountPath\":\"/etc/kubernetes/static-pod-resources\"},{\"name\":\"cert-dir\",\"mountPath\":\"/etc/kubernetes/static-pod-certs\"},{\"name\":\"tmp-dir\",\"mountPath\":\"/tmp\"}],\"livenessProbe\":{\"httpGet\":{\"path\":\"healthz\",\"port\":17697,\"scheme\":\"HTTPS\"},\"initialDelaySeconds\":10,\"timeoutSeconds\":10},\"readinessProbe\":{\"httpGet\":{\"path\":\"healthz\",\"port\":17697,\"scheme\":\"HTTPS\"},\"initialDelaySeconds\":10,\"timeoutSeconds\":10},\"terminationMessagePolicy\":\"FallbackToLogsOnError\",\"imagePullPolicy\":\"IfNotPresent\",\"securityContext\":{\"readOnlyRootFilesystem\":true}}],\"terminationGracePeriodSeconds\":135,\"hostNetwork\":true,\"tolerations\":[{\"operator\":\"Exists\"}],\"priorityClassName\":\"system-node-critical\",\"priority\":2000001000},\"status\":{}}","openshift":{"cluster_id":"f5db0d9f-b567-4cc7-a5b1-1f0f4504e63d","sequence":1765584712068035256},"timestamp":"2025-12-10T23:56:13.605440411Z"}

You will need to use a command like jq to parse the logs into a more human-readable format:

Our metrics are working as well:
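jq is one option. As a rough alternative sketch, the same idea in Python: cut each syslog line at the first `{`, decode the rest as JSON, and pretty-print it. The helper name `parse_syslog_line` and the sample line are made up for illustration (only the `openshift.cluster_id` field is borrowed from the output above), and lines that carry only a fragment of a record, like the long ones above that arrive split up, are passed through undecoded.

```python
import json


def parse_syslog_line(line: str):
    """Split a syslog line into (prefix, payload).

    The payload is the decoded JSON object, or None when the line carries
    no JSON or only a fragment of it.
    """
    start = line.find("{")
    if start == -1:
        return line.rstrip(), None
    prefix, raw = line[:start].rstrip(), line[start:]
    try:
        return prefix, json.loads(raw)
    except json.JSONDecodeError:
        # Fragment of a longer record: leave it untouched.
        return line.rstrip(), None


# Made-up sample line, shaped like a forwarded record.
sample = (
    '2025-12-12T18:11:40-06:00 control01.node.example.com app: '
    '{"message": "hello", "openshift": {"cluster_id": "example"}}'
)

prefix, payload = parse_syslog_line(sample)
print(prefix)
print(json.dumps(payload, indent=2))
```

Feeding it the lines from /var/log/messages one at a time would print the syslog header followed by an indented JSON document for every complete record.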

Learned Lessons

I realize just how outdated some of the Red Hat exams are. That said, I don't think I would have known unless I actually applied what I learned outside of the classroom, or asked around before taking the class.

At any rate, feel free to reach out to me here if you have any thoughts or suggestions.